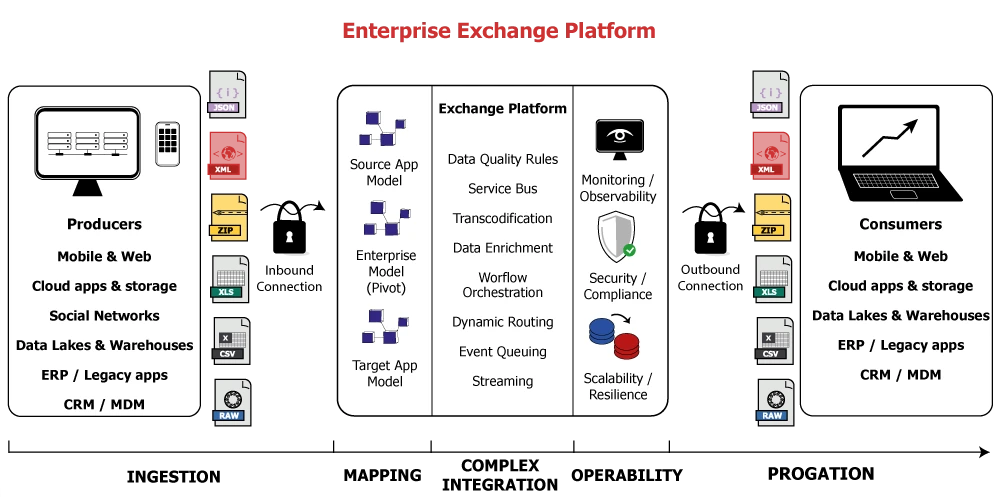

More and more companies are streamlining their data exchanges by adopting a single exchange platform, with two objectives:

- Pooling skills and resources.

- Building exchanges on pivot formats and offering data consumption services to internal and external consumers (exposure, publication…).

Contact our experts now and discover how we can help you leverage best practices.